Our proposed method, IMMA, modifies the pre-trained model weights $\theta^p$ with an immunization method $\mathcal{I}$ before adaptation $\mathcal{A}$, so that $\mathcal{A}$, when trained on the immunized weights $\mathcal{I}(\theta^p)$, fails to capture the target concept $\mathbf{c}'$ in the training images $\mathbf{x}'$. To achieve this goal, we propose the following bi-level program:

$$\overbrace{\max_{\theta_{\in\mathcal{S}}} L_{\mathcal{A}}(\mathbf{x}'_{\mathcal{I}}, \mathbf{c}'; \theta, \phi^\star)}^{\text{upper-level task}} \ \text{s.t.}\ \phi^\star = \overbrace{ \arg\min_\phi L_{\mathcal{A}}(\mathbf{x}'_{\mathcal{A}}, \mathbf{c}'; \theta, \phi)}^{\text{lower-level task}}.$$

Here, $\mathcal{S}$ denotes the subset of $\theta$ that is trained by IMMA; it is a hyperparameter that we choose empirically. $\phi$ denotes the parameters that are fine-tuned by $\mathcal{A}$.
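To make the structure of the bi-level program concrete, here is a minimal toy sketch in plain Python. It is not the IMMA implementation. It assumes a hypothetical scalar model `pred = (theta + phi) * x`, a squared-error stand-in for $L_{\mathcal{A}}$, and a first-order alternating scheme (one descent step on $\phi$, then one ascent step on $\theta$). All function names, batches, and learning rates are illustrative assumptions, and $\mathcal{S}$ is taken to be all of $\theta$.

```python
# Toy illustration of the bi-level objective (an assumption-laden sketch,
# not the IMMA code). theta stands in for the pre-trained weights,
# phi for the parameters fine-tuned by the adaptation method A.

def loss(batch, theta, phi):
    """Squared-error stand-in for L_A, averaged over (x, c) pairs."""
    return sum(((theta + phi) * x - c) ** 2 for x, c in batch) / len(batch)

def grad(batch, theta, phi):
    """dL/dphi; identical to dL/dtheta here because they enter additively."""
    return sum(2 * ((theta + phi) * x - c) * x for x, c in batch) / len(batch)

def adapt(batch, theta, steps, lr):
    """Lower-level task: fine-tune phi from zero with theta frozen."""
    phi = 0.0
    for _ in range(steps):
        phi -= lr * grad(batch, theta, phi)
    return phi

def immunize(batch_a, batch_i, theta, steps, lr_phi, lr_theta):
    """Alternate a descent step on phi with an ascent step on theta."""
    phi = 0.0
    for _ in range(steps):
        phi -= lr_phi * grad(batch_a, theta, phi)      # lower level: minimize
        theta += lr_theta * grad(batch_i, theta, phi)  # upper level: maximize
    return theta

# Two hypothetical batches of the same "concept" (c = 2x),
# standing in for x'_A and x'_I.
batch_a = [(1.0, 2.0), (2.0, 4.0)]
batch_i = [(0.5, 1.0), (1.5, 3.0)]
theta_p = 1.0  # pre-trained weight

theta_imm = immunize(batch_a, batch_i, theta_p,
                     steps=50, lr_phi=0.05, lr_theta=0.05)

def loss_after_adaptation(theta, steps=5, lr=0.05):
    """Loss reached by a fixed adaptation budget starting from theta."""
    phi = adapt(batch_a, theta, steps, lr)
    return loss(batch_a, theta, phi)

plain_loss = loss_after_adaptation(theta_p)
immunized_loss = loss_after_adaptation(theta_imm)
print(f"without immunization: {plain_loss:.4f}")
print(f"with immunization:    {immunized_loss:.4f}")
```

In this toy, the same adaptation budget leaves a higher loss when it starts from the immunized weight than from the pre-trained one. Because the adapter here is a simple additive offset, a long enough adaptation run would still fit the target, so the sketch only illustrates the alternating max/min structure and says nothing about the robustness of the actual method.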

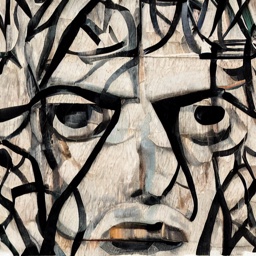

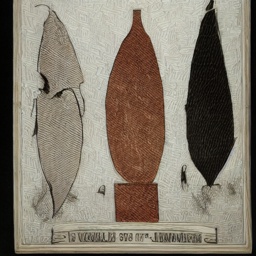

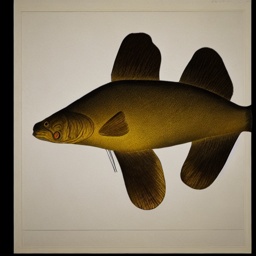

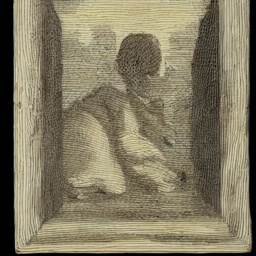

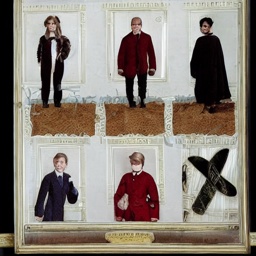

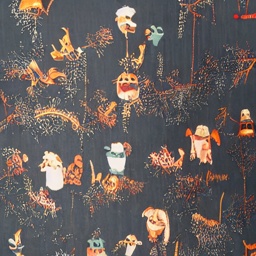

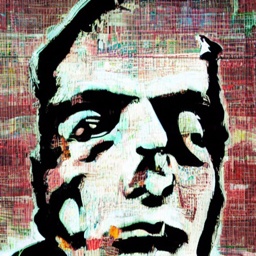

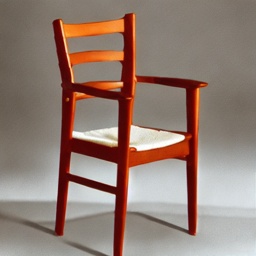

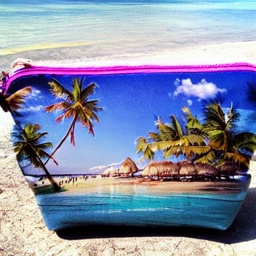

Drag the slider bar under each block of images to see how the generated images change over fine-tuning epochs for each method. We compare results without (2nd row) and with (3rd row) our proposed immunization method (IMMA) on the personalized content setting in Sec. 5.2. As in the paper, we use the prompt "A $[V]$ on the beach", following DreamBooth. In general, without IMMA the models learn the concepts in the reference images within a few epochs, whereas models immunized with IMMA struggle or fail to learn them.


@inproceedings{zheng2024imma,
  title={{IMMA}: Immunizing text-to-image models against malicious adaptation},
  author={Zheng, Amber Yijia and Yeh, Raymond A},
  booktitle={European Conference on Computer Vision},
  pages={458--475},
  year={2025}
}